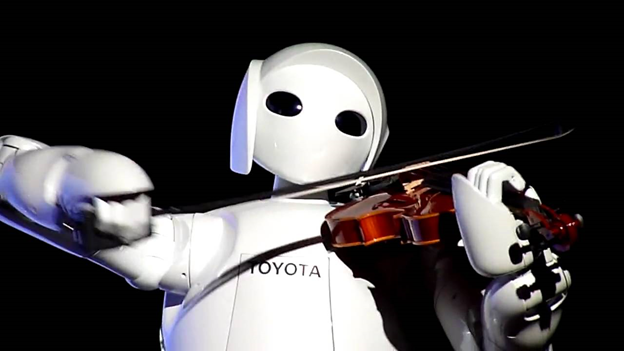

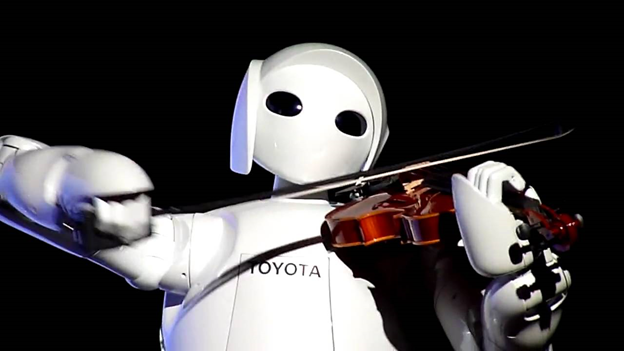

Toyota’s Violinist Partner Robot

The difference between robot “music” and human “music”

Many people have looked into combining technology and music, and technology appears to be changing the way people interpret and listen to music. Listening habits have changed as technology’s integration with music has produced many different genres. When people who are not musically trained are asked “What makes music good music?” they give many different answers. They listen for a really good beat or for whether the piece sounds nice overall. They look for a recognizable, easy-to-remember melody that sounds “catchy.” When two artists sing the same song, or one does a remix of it, people will argue over who sounds better. They say that one version sounds better overall or that one of the singers has a better voice. If it’s a remix, people will say the beat sounds better or that the music is more “catchy.” Training in music brings a whole new way of listening. People who have had training in music listen not only to the overall performance but also, in depth, to how each part is executed. They pay attention to how a part is performed and listen for phrasing and interpretation. In classical music it’s not about what the notes are but about how they are played, and that’s why musicians are able to differentiate between artists or even tell which country the music is from. If technology is added to music, how much of this in-depth analysis is lost? When technology is added to music, is there still emotion in the music? I will be analyzing the difference between robotic music and music produced by humans. I will also be analyzing whether people are able to hear the difference between an ensemble of musicians and an ensemble of just robots. In this paper I use Stuart S. Blume’s idea of letting people decide whether or not to use technology to argue that what matters is not what is used but what is produced. 
I suggest that only a few people will be able to tell the difference between live musicians and robots. This is important because it shows the difference between the true nature of music and sound. This essay uses the works of Blume, Clark and Chalmers, Picard, and Sobh, Wang, and Coble to analyze how technology affects music. The essay then analyzes data gathered when people are exposed to music played by robots and music played by live musicians.

One of the most important works that I use is Stuart S. Blume’s “The Rhetoric and Counter-Rhetoric of a ‘Bionic’ Technology” (1997). It is important because Blume develops the theme of giving people freedom of choice. Blume writes about the deaf community and cochlear implants. He says that the deaf community should have its own say on using cochlear implants and that technology should be adopted when it is wanted rather than forced on people. This applies to music because people should allow the use of technology if it is wanted. People should not be forced to use it or to avoid it. Another work that relates to technology is Andy Clark and David Chalmers’s “The Extended Mind.” In their paper, they talk about how the environment plays an active role in human cognition, which they call “active externalism.” Clark and Chalmers explain that humans create a two-way interaction with external objects that can be seen as a cognitive system. They call this a “coupled system” and say humans constantly create these coupled systems with objects in the environment like a cellphone, a calculator, or even a computer. This relates to how humans create music with technology because technology can be the external “mind” of a human. The two can create music together since they act as one system. I also use an interview with Rosalind Picard, who answers the question “Can robots become human?” at the Veritas Forum at MIT in 2007. Picard works to give technology human emotion. She says that it is very difficult because the robot isn’t really “feeling” anything but rather is being programmed to show what it should be feeling. She says that they can program the robot to look happy but it isn’t “real” happiness. 
She says, “These are mathematical functions and processes that provide some of the roles we believe emotion plays in animals” (203). This relates to music and technology because it shows that people have a very hard time portraying emotion through the technology that they use for music. Another important work is “Experimental Robot Musicians” by Tarek M. Sobh, Bei Wang, and Kurt W. Coble. They write about building a robot that is capable of playing musical instruments. They say that one of the most difficult parts of building the robot is getting it to portray musical expressiveness. They explain, “by controlling the parameters involved in music expressions through computer-controlled/programmed mechanical entities, robot musicians are designed to bypass several technical difficulties that are typically encountered by human musicians.” They say that a robot musician could potentially be better than a human, both because of the accuracy of its playing and because it has features that a human doesn’t have.

I am analyzing whether people can hear the difference between music played by a robot and music played by live musicians. This is important because it shows whether playing the correct notes is good enough or whether live musicians are able to produce something unique that robots cannot. To test this, I have chosen Baroque music by Jean-Baptiste Lully (1632-1687). Lully wrote a march called “Marche pour la cérémonie des Turcs,” which can be roughly translated as “March for the Ceremony of the Turks.”

I have chosen Baroque music because it is a simpler kind of “Classical” music. Baroque music is easier to listen to because most of the melodic themes are repeated over and over throughout the piece with elaborate variations on them. It also keeps a single mood throughout the entire piece. Baroque music’s tonality and rhythm are easy to listen to because they stay close to the originally presented theme. The march style is also simpler since it was mainly used for the military. It usually presents a main theme followed by variations and a development of the theme, usually played by wind and brass instruments. Another part of my research involves the machine “Sound Machines 2.0” by the company Festo. The machine is made up of five self-playing instruments that listen to a performed melody, then reproduce it and add improvisation to it. Festo says, “the individual acoustic robots are interlinked in such a way that they can listen to each other. This gives rise to constantly new variations, which differ from the original theme while retaining the essence of the composition.” In addition, Festo’s Sound Machines 2.0 is able to reproduce what Baroque musicians would do with their pieces. Baroque music relies heavily on ornamentation and improvisation. Festo’s Sound Machines are similar because the machines listen to the theme and recreate it while improvising on it.

I tested my analysis by playing the original Baroque march and the Festo Sound Machines’ rendition of it. I had people with varying knowledge of music listen to the pieces and comment on them. The people who listened to the two recordings were: a student without knowledge of music, a student with little knowledge of classical music, a student who is studying music and planning to major in it, a student who listens to and works with electronic music, and a person who has a master’s degree in music. These people were asked questions such as “What were some of the musical characteristics that you heard from the first and second piece?” and “What were some of the differences between the two pieces?” I chose the music and questions because they could be answered without any knowledge of music and didn’t give away the fact that a machine was involved in performing the music. The data show that the two people with the most knowledge of music were more insightful, but only one of them was able to tell that one of the recordings was played by machines. Andy Clark and David Chalmers’s “The Extended Mind” helps to show that humans and robots can work together. In their paper, they talk about how the environment plays an active role in human cognition, which they call “active externalism,” but I want to focus on what they say about “coupled systems.” This helps in looking at robots playing music because the technology is part of the environment and it influences humans to create music with it. It is also an example of a coupled system: the human can be seen as needing the robot to make music as a form of expression. Rosalind Picard helps explain the difficulty of accepting a robot’s performance as music, because the robot is merely programmed to play and is not itself experiencing or expressing anything. 
Sobh, Wang, and Coble’s work “Experimental Robot Musicians” shows the difficulty of building the robot and of programming the smallest details, which, like emotion, are hard to produce. It also raises the possibility of robots being technically better than live musicians while still facing the problem of adding detail and musical expression.

| Subject # | Experience Level | Comments on Sound Machines | Comments on Musicians |
| --- | --- | --- | --- |
| 1 | None | Royal; sounded smoother | More like a march; lively |
| 2 | A little | Like a piano with a pitch bender; round and lazy | “I like the first one better because it sounded much more livelier whereas the second recording was just kind of there, but they both sounded nice” |
| 3 | Electronic music | Sounds like brass instruments; feels more somber and less uplifting; sounds like brass instruments but there might be a synthesizer there too | Festive and lively |
| 4 | Student majoring in music | Lighter; different kind of brass instrument; relaxed | More heroic |
| 5 | Master’s degree in music | Not actual people playing; could hear very quiet clicking from robotic parts; no phrasing, just playing notes; instruments didn’t sound right, sounded synthesized | |

After listening to the two recordings, I think Clark and Chalmers would consider both of them good and simply two different interpretations. They would suggest that the machines are an extension of the person who created them, so the performance is that person’s own interpretation through the machines. Clark and Chalmers would also suggest that the machines are an example of a coupled system, because the machines need the human to input the music while they reproduce it and deliver more to the human. Picard would think that the recording of the live musicians is better because the machine recording is not able to put emotion into the music. She would say that the machines aren’t capable of experiencing what the ensemble of musicians experiences. They also wouldn’t be able to produce genuine feeling or emotion even if they were programmed to. The machine recording also shows the difficulties that Sobh, Wang, and Coble write about. It shows that the machine is able to improvise while playing, which is difficult even for trained musicians. The Sound Machines also show that it is difficult to portray the musical expression of the Baroque period, which lies in the ornamented figures on the theme.

The first person that I asked was a student who has no knowledge of classical music. He said that the Sound Machines recording sounded royal and that the musicians’ ensemble sounded more like a march and was very lively. He also said that the Sound Machines recording sounded a lot smoother. This is a very interesting observation because the piece is supposed to be a march, but according to him only one of the recordings sounded like a march. This observation seems to follow Picard’s way of thinking because it shows that the Sound Machines were not able to reproduce the march feeling that the musicians were able to portray.

The second student, who only knows a little about music, described the two recordings by their instrumentation and the feeling of each. 
He observed that the Sound Machines recording sounded like it had an electric piano with a pitch bender. He said that the recording of the musicians sounded more crisp and the Sound Machines recording sounded more round and lazy. He said that he liked the first piece better because it sounded like stereotypical pirate ship music. “The first one [the musicians recording] sounded much more livelier whereas the second recording [Sound Machines] was just kind of ‘there,’ but they both sounded nice.” I think this response follows Clark and Chalmers because he treated the recordings as just two different interpretations from two different groups.

The third person that I asked has a background in mixing electronic music, another musical genre. He described the musicians’ recording as more festive and lively. He said that the Sound Machines recording sounded more brass-like and felt more somber and less uplifting. He also made a very interesting observation, saying that the Sound Machines recording “sounds like brass instruments but it also sounds like there is a synthesizer there too.” This observation comes really close to figuring out what was playing in the Sound Machines recording. This person’s observation is closest to Blume’s idea that technology should be used if the person wants to use it.

The next person is a student majoring in music. When he listened to the two recordings, he said that the Sound Machines recording sounded lighter and more like a different kind of brass instrument. He observed that the musicians’ recording sounded more heroic and that the Sound Machines sounded more relaxed.

The last person that I had listen to the two recordings has a master’s degree in music. Within the first few seconds of listening to the Sound Machines, he said that there weren’t actual people playing. He noticed a very quiet clicking from the robotic parts that were moving to play the notes. 
He said that there was no phrasing at all and the Sound Machines were just playing notes. He also observed that the instruments didn’t quite sound right and that they sounded synthesized. This is like Picard’s idea, but a more extreme version of it.

Out of the five people who were interviewed, only one was able to tell that one of the recordings was made by a machine, and one other hinted that it could include a synthesized instrument. The data suggest that the more musical experience a person has, the more deeply that person is able to listen to music. It seems that realizing the true nature of music requires a lot of knowledge of music. Subject 5 was the only person to look past the different instrumentation and listen to how the music was played.

People have tried to incorporate technology into music, but the pieces they have created are only somewhat similar to human performances. This changes the way people listen to music because it blurs the difference between music and sound. Technology has evolved music into many different genres that aren’t similar to each other. This is important because it gives music multiple meanings for people but doesn’t really define what good music is.

Blume, S. S. "The Rhetoric and Counter-Rhetoric of a 'Bionic' Technology." Science, Technology & Human Values 22.1 (1997): 31-56. Print.

Clark, Andy, and David Chalmers. "The Extended Mind." Analysis 58.1 (1998): 7-19.

Willard, Dallas, Daniel S. Cho, and Sarah Park. "Living Machines." A place for truth: leading thinkers explore life's hardest questions. Downers Grove, Ill.: IVP Books, 2010. 194-215. Print.

Sobh, Tarek M., Bei Wang, and Kurt W. Coble. "Experimental Robot Musicians." Journal of Intelligent and Robotic Systems 38.2 (2003): 197-212.

Festo’s Sound Machines 2.0 http://www.festo.com/net/SupportPortal/Files/156744/Brosch_FC_Soundmachines_EN_lo_L.pdf http://www.youtube.com/watch?v=XE1Mgo2ZimY#t=74

Lully, Jean-Baptiste (1632-1687). "Marche pour la cérémonie des Turcs." http://www.youtube.com/watch?v=Sy-yugPw_X8